March 4, 2025

Asked in 2021 to imagine the factory of the future, Boeing predicted that “immersive 3D engineering designs will be twinned with robots that speak to each other” while “mechanics around the world will be linked” by XR headsets. Four years later, that vision isn’t far from reality.

The use of robotics, especially in manufacturing, is nothing new, nor is the use of virtual reality (VR) for enterprise applications like training. The convergence of the two technologies, however, is a newer story that is still unfolding.

We’re seeing the rise of several VR-robotics use cases, including:

- The use of VR to train robots

- The use of VR to operate or teleoperate robots, and

- The use of robots to scan environments and capture information for immersive applications

INTRODUCTION

Robots have been in use for some time, mainly to perform automated, repetitive tasks in controlled industrial environments. I say controlled because traditional robots are rigid and can’t adapt to change, which can put human workers and expensive machinery at risk.

When it comes to VR, we’re interested in humanoid robots as well as robotic arms. More specifically, we’re talking about robots that can carry out complex pre-programmed tasks, be operated remotely (especially in constrained, high-risk environments), adapt to changing conditions, and even learn for themselves.

TRAINING ROBOTS IN VR

Virtual reality can be used to teach robots to perform tasks. Here, robots learn by observation: the human worker’s movements essentially program the robot, serving as data to train robot AI models.

Take assembling a car door: The idea is that a human wearing a VR headset goes through the assembly steps, and the robot learns by replicating the worker’s movements. The benefits are obvious: Not only can robots work 24 hours a day, but they can also take humans out of hazardous tasks and environments.

Tesla provides a real-world example. In 2024, the company posted a job listing seeking someone to wear both a VR headset and a motion-capture suit for “collecting and processing data” to fuel the development of the Tesla Bot (Tesla’s Optimus AI robot). The chosen candidate would be required to walk a predetermined route and perform certain movements, presumably so that a robot could learn the same route and movements.

Similarly, Toyota partnered with Ready Robotics to program industrial robots through simulation using Nvidia’s Omniverse platform, an approach known as “sim-to-real” robot programming, specifically for Toyota’s aluminum hot forging production lines.

In this case, a human isn’t necessarily required to wear a VR headset. Instead, in what can be viewed as an application of digital twins, the robot learns the forging routine in a simulated production “work cell,” eliminating the need to work with real hot metal parts. In this way, Toyota can optimize the use case before deployment in live production.

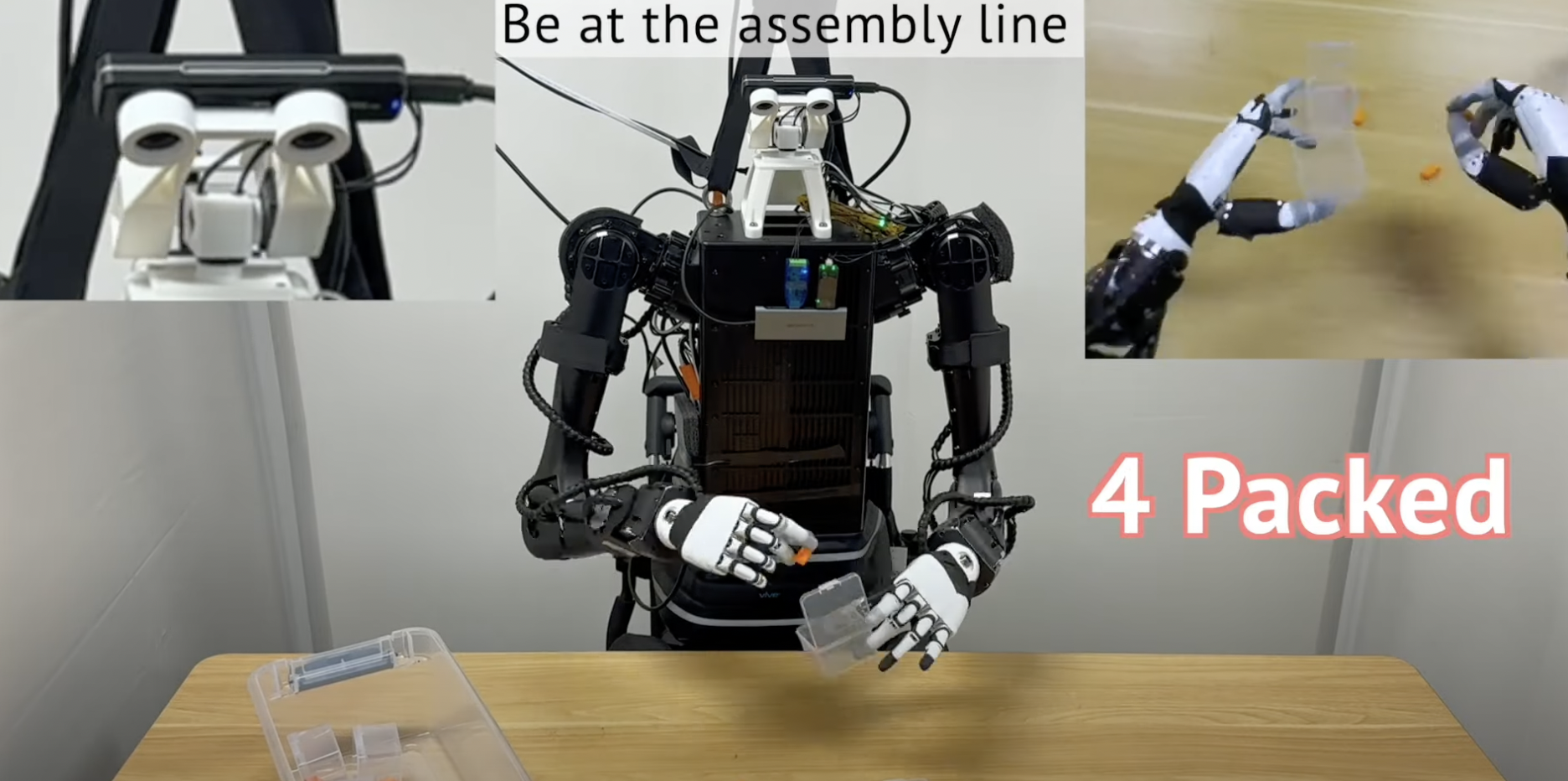

In another example, UC San Diego and MIT researchers have been working on Open-TeleVision, a system that uses Apple Vision Pro to teach robots chores like folding laundry. The XR headset lets the human operator see from the robot’s POV, while precise tracking enables the robot to follow the operator’s arm and hand movements.

It’s teleoperation for imitation learning: the operator’s movements become data for training imitation learning algorithms. Repeated demonstrations are needed so that, ultimately, the robot can do the job without human intervention.
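The source doesn’t describe Open-TeleVision’s actual learning pipeline. The following sketch only illustrates the general idea of learning from teleoperated demonstrations, using the simplest possible behavior-cloning policy: a nearest-neighbor lookup. It assumes each demonstration is stored as an (observation, action) pair. The class and field names are hypothetical, and real systems typically train neural networks on camera images and joint states.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Demo:
    """One recorded teleoperation sample (hypothetical format)."""
    observation: tuple[float, ...]  # what the robot sensed, e.g. object position features
    action: tuple[float, ...]       # what the operator commanded, e.g. arm joint targets


class NearestNeighborPolicy:
    """Minimal behavior cloning: reuse the action from the most similar demonstration."""

    def __init__(self) -> None:
        self.demos: list[Demo] = []

    def record(self, observation, action) -> None:
        # Every teleoperated repetition adds (observation, action) pairs to the dataset.
        self.demos.append(Demo(tuple(observation), tuple(action)))

    def act(self, observation) -> tuple[float, ...]:
        if not self.demos:
            raise ValueError("no demonstrations recorded yet")
        # Choose the demonstration whose observation is closest (Euclidean distance).
        best = min(self.demos, key=lambda d: math.dist(d.observation, observation))
        return best.action


# Two demonstrations from an operator, then the robot acting on its own.
policy = NearestNeighborPolicy()
policy.record((0.0, 0.0), (0.1, 0.2))
policy.record((1.0, 1.0), (0.9, 0.8))
print(policy.act((0.9, 1.1)))  # (0.9, 0.8)
```

More demonstrations cover more situations, which is one reason repetition matters. Practical systems replace the lookup with a learned model that can generalize between demonstrations.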

OPERATING ROBOTS IN VR / IMMERSIVE TELEOPERATION

VR teleoperation for hazardous work

VR teleoperation combines the precision of robotics with human expertise and improvisation, enabling robots to perform more complex tasks and human workers to guide hazardous work without putting themselves at risk.

Imagine being able to defuse a bomb from a distance. It’s a dangerous job that can’t be fully automated because it requires human experience and oversight, which makes it well suited to a VR-operated robot. The key ingredients are a VR headset with hand tracking and sensors and cameras on the robot: A trained, headset-clad professional simply moves their hands, and the robot replicates the same motions in real time. Applications in sectors such as construction, logging, and mining are clear.
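The source doesn’t say how any particular system maps hand motion to robot motion. The sketch below is a minimal version under several assumptions: the headset reports hand positions in meters, and the robot accepts Cartesian end-effector targets. Each frame, it offsets, scales, clamps, and smooths the tracked position. All names, bounds, and parameters are hypothetical.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class Workspace:
    """Axis-aligned box (meters) the robot's end effector may reach; bounds are hypothetical."""
    lo: Vec3
    hi: Vec3


class HandToRobotMapper:
    """Turns tracked hand positions into robot end-effector targets, once per frame."""

    def __init__(self, hand_origin: Vec3, robot_origin: Vec3, scale: float,
                 workspace: Workspace, smoothing: float = 0.5) -> None:
        if not 0.0 <= smoothing < 1.0:
            raise ValueError("smoothing must be in [0, 1)")
        self.hand_origin = hand_origin    # hand position captured at calibration
        self.robot_origin = robot_origin  # robot target that corresponds to that hand pose
        self.scale = scale                # < 1 shrinks motions for finer control
        self.workspace = workspace
        self.smoothing = smoothing        # 0 = none; closer to 1 = heavier smoothing
        self.last: Vec3 = robot_origin

    def update(self, hand_pos: Vec3) -> Vec3:
        # Move the robot target by the (scaled) displacement of the hand since calibration.
        raw = [r + self.scale * (h - h0)
               for h, h0, r in zip(hand_pos, self.hand_origin, self.robot_origin)]
        # Clamp to the safe workspace so tracking glitches can't drive the arm out of bounds.
        safe = [max(lo, min(hi, v))
                for v, lo, hi in zip(raw, self.workspace.lo, self.workspace.hi)]
        # Exponential smoothing damps hand tremor and tracking jitter.
        a = self.smoothing
        self.last = tuple(a * p + (1 - a) * s for p, s in zip(self.last, safe))
        return self.last


mapper = HandToRobotMapper(hand_origin=(0.0, 0.0, 0.0), robot_origin=(0.5, 0.0, 0.3),
                           scale=0.5, workspace=Workspace((0.0, -0.5, 0.0), (1.0, 0.5, 1.0)),
                           smoothing=0.0)
print(mapper.update((0.2, 0.1, 0.0)))
```

The scale factor is one plausible design choice for precision work. Shrinking the operator’s motions lets a relatively coarse hand movement produce a fine robot movement.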

In this way, seeing through the robot’s eyes and controlling its arms, a human could fix a leak without physically entering a nuclear power plant, a surgeon could perform high-stakes surgery with greater precision, and, conceivably, a company could employ “less athletic” individuals for manual work.

That’s what JR West is doing. In 2024, the Japanese rail operator adopted a giant “robot torso attached to a crane arm-like appendage” to carry out maintenance work up to 40 feet (about 12 meters) above the ground. The robot’s arms can hold and manipulate equipment weighing up to 88 pounds (about 40 kilograms). A human operator wears a VR headset to see from the robot’s POV and adjusts the force of the robot’s grasp using special hand controls.

Initially, the robot helped paint overhead equipment and clear trees that were obstructing train tracks. New hand attachments should expand its capabilities. Benefits include improved safety, higher productivity, and even a more inclusive workforce, since operating the robot requires less physical strength.

Developers at the Institute for Human & Machine Cognition (IHMC) have been working on similar tech. A 2024 video shows IHMC’s humanoid robot Nadia boxing. Nearby, an engineer wearing a VR headset commands Nadia’s arms and legs with VR controllers.

Such a setup, essentially a VR-enabled remote monitoring station, could allow robots to be deployed in more complex operations and dynamic work environments, such as disaster response and firefighting.

It’s the best of both worlds: Robots keep people out of danger and excel at tasks like precise measurement, but direct teleoperation is available when necessary, allowing humans to make quick decisions as conditions change. IHMC says military, space exploration, and research organizations have expressed interest in Nadia’s development.

The National Safety Council (NSC) recently examined the role of robots in workplace safety and found that remote-controlled robots (including VR-operated ones) offer particularly high value for work in confined spaces, work at heights, work with moving parts, precision cutting and welding, pick-and-place, and hazardous material handling.

VR teleoperation for non-uniform tasks

Stocking shelves isn’t as high-stakes as bomb defusal, but a VR-controlled robot can do it. In 2022, Telexistence announced it would install “AI restocking robots” in 300 FamilyMart stores across Japan. The TX SCARA relies on AI to know when and where to place products. It can restock up to 1,000 bottles per day, saving three hours of human work per store every day. VR comes into play when issues arise and an employee needs to take over.

IHMC’s VR-humanoid robot system (above) is another example of VR supervision. So is Extend Robotics’ Advanced Mechanics Assistance System (AMAS), which Mitsubishi Electric adopted in 2024 to make it easier to oversee its Melfa industrial robots and support “error recovery.”

Traditionally, industrial robots in single-purpose manufacturing setups require heavy operator involvement to handle non-uniform tasks. AMAS uses XR so that non-robotics experts can control robots with gestures and intervene when there’s a problem, such as a robot failing to complete a pre-programmed task.

When an operator can quickly “log in” to a robot to, say, pick up a dropped item, and can oversee multiple sites at once, manufacturers can use robots in less structured workflows and get more value from their existing robotics investments.

VR teleoperation for telecommuting

Way back in the Oculus Rift days, MIT researchers proposed a VR-humanoid robot system that could one day let manufacturing workers work remotely as easily as the rest of us. Remote work is evolving, and VR teleoperation could help address the blue-collar skills gap in two ways: by essentially gamifying jobs for younger workers (making them more attractive) and by opening positions to less traditional, currently overlooked candidates.

At DAWN Robot Cafe in Tokyo, the waiters are robots remotely controlled by people with disabilities. Through the robots, these operators interact with customers, take orders, and deliver food, using input methods suited to their physical abilities.

In addition to expanding the talent pool, VR-controlled robots could help improve collaboration between in-person and remote workers. In 2023, researchers at Brown and Cornell Universities presented VRoxy, experimental software that allows a person wearing a Meta Quest Pro to be virtually transported to a real office, lab, or other workspace and have a physical presence at that location as a humanoid robot.

It’s essentially working in person by robot proxy: You, the remote worker, see through the robot’s eyes. You move through space using natural movements (the robot moves in step) and collaborate with colleagues via gestures (e.g., pointing, nodding) and even facial expressions. The robot recreates every movement.

What if your room is considerably smaller than the physical workspace? An innovative mapping technique addresses this: In your virtual view of the real environment, blue circles on the ground serve as teleport links to account for the discrepancy between the spaces. Stepping on a circle might transport you to the other side of the room or even another building, though you only take one real step. Once in a designated “task area,” you once again see a live feed of the physical workplace and can move around and interact with others.
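The source doesn’t detail how VRoxy implements its teleport links. The sketch below illustrates the general idea only, under simplifying assumptions: positions are 2D floor coordinates in meters, the circles are defined in the operator’s room, and the robot navigates on its own to whatever remote target it’s given. The class and field names are hypothetical.

```python
import math
from dataclasses import dataclass

Vec2 = tuple[float, float]


@dataclass
class TeleportLink:
    """A circle on the operator's floor linked to a spot in the remote workspace (hypothetical)."""
    center: Vec2         # circle position in the operator's room (meters)
    radius: float
    remote_target: Vec2  # destination for the robot in the remote workspace (meters)


class RoomToWorkspaceMap:
    """Maps walking in a small room onto a larger remote workspace."""

    def __init__(self, links: list[TeleportLink], anchor: Vec2 = (0.0, 0.0)) -> None:
        self.links = links
        self.anchor = anchor  # remote point currently aligned with the room's origin

    def update(self, local_pos: Vec2) -> Vec2:
        for link in self.links:
            if math.dist(local_pos, link.center) <= link.radius:
                # Re-anchor so the operator's current spot corresponds to the link's target.
                # While the operator stays inside the circle, the target stays pinned there.
                self.anchor = (link.remote_target[0] - local_pos[0],
                               link.remote_target[1] - local_pos[1])
                break
        # Outside the circles, each real step moves the robot's target by the same amount.
        return (self.anchor[0] + local_pos[0], self.anchor[1] + local_pos[1])


room = RoomToWorkspaceMap([TeleportLink(center=(2.0, 0.0), radius=0.3, remote_target=(20.0, 5.0))])
print(room.update((1.0, 0.0)))  # ordinary step: (1.0, 0.0)
print(room.update((2.0, 0.1)))  # on the circle: jumps to roughly (20.0, 5.0)
print(room.update((1.5, 0.1)))  # stepping back off continues from the new spot
```

Re-anchoring, rather than resetting the operator’s position, keeps later steps one-to-one. After a jump, walking half a meter in the room still moves the robot half a meter.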

At the 2024 Venice Biennale, Italian inventors demonstrated a similar proxy experience enriched with haptics. Wearing a VR headset, a haptic suit, and haptic feedback gloves, a person controlling the humanoid iCub 3 robot was able to see and feel things remotely.

iCub 3 has 54 points of articulation, cameras for eyes, and sensors all over its body that “pick up” sensations which are then replicated for the human operator. When the operator reacts, the VR system “picks up” the movements for the robot to match, creating a kind of feedback loop.

ROBOTS’ ROLE IN CREATING 3D ENVIRONMENTS

Lastly, robots can be used to scan spaces and collect data for immersive applications. BMW is using Boston Dynamics’ Spot robot for this purpose, collecting IoT data to get a more complete view of operations at its Hams Hall plant.

A few years ago, the UK plant realized it had a visibility problem: Its production processes generated vast amounts of data that typically ended up siloed, and as a result, internal teams were using more than 400 custom dashboards. Thus began an effort to create a digital twin of the entire plant that would serve as a single source of truth for everyone.

Rather than install IoT sensors on its equipment (which takes years and a lot of money), BMW combined VR and Spot. Spot is a mobile robot that student interns could operate easily and that could cover the same area as fixed sensors. It helped fill in the data gaps the digital twin needed.

Spot, for instance, has a thermal camera to capture temperature data for all the plant’s assets, an acoustic sensor to identify air leaks, and 360-degree camera technology for 3D scanning. Outfitted with a variety of sensors, robots like Spot could collect all kinds of information, not only for digital twins but also for virtual spaces.

CONCLUSION

From critical surgery to stocking shelves and scanning factories, the combination of VR and robotics has a wide range of applications. Here and there, companies and researchers are figuring out how to combine the two emerging technologies to get the most value. Watch this space!

Image source: JR West